Grok 3 Advances, Language-Diffusion Fusion, Murati’s Vision, Summit Takeaways | AI Dial-Up

Several of the biggest players in AI made major moves this past week. Let’s dive into the stories shaping the future of artificial intelligence.

🚀 Grok 3 Pushes Boundaries of AI Reasoning

xAI has unveiled Grok 3, which it presents as a significant leap in AI reasoning. According to xAI, the model was trained on the company’s Colossus supercluster with 10x the compute of previous state-of-the-art models, and it posts strong results across technical benchmarks. According to Yahoo Finance, the release is xAI’s “most advanced model yet,” combining extensive pretraining knowledge with sophisticated reasoning capabilities.

The model family includes Grok 3 and a more cost-efficient Grok 3 mini variant. xAI reports the following benchmark results:

- 93.3% on AIME’25 (American Invitational Mathematics Examination)

- 84.6% on GPQA (graduate-level expert reasoning)

- 79.4% on LiveCodeBench for code generation

What sets Grok 3 apart is its choice of reasoning modes (Think, Big Brain, DeepSearch) and a 1M-token context window, which xAI says lets it process long documents while maintaining instruction-following accuracy. Grok 3 is currently rolling out to X Premium+ subscribers, with API access planned for the near future.

💡 Language-Diffusion Models Could Mark a New LLM Architecture

Researchers have introduced LLaDA, an 8B-parameter diffusion model that challenges the autoregressive approach behind most of today’s large language models. Instead of predicting text one token at a time from left to right, LLaDA uses masked diffusion: it learns to recover randomly masked tokens and generates text by progressively unmasking a fully masked sequence.
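
To make the masked-diffusion idea concrete, here is a minimal sketch of its training objective as we understand it from the LLaDA paper: pick a masking ratio t, mask each token independently with probability t, and score the model only on the masked positions, weighting the loss by 1/t. It is plain Python with a placeholder uniform "model". `MASK`, `forward_mask`, `masked_diffusion_loss`, and `uniform_predictor` are hypothetical names for illustration, not LLaDA’s code; the real mask predictor is a Transformer trained on batches of token IDs.

```python
import math
import random
from typing import Callable, List

MASK = -1  # hypothetical [MASK] token id for this toy example

# Hypothetical interface: maps a partially masked sequence to one probability
# distribution over the vocabulary for every position.
Predictor = Callable[[List[int]], List[List[float]]]

def forward_mask(tokens: List[int], t: float, rng: random.Random) -> List[int]:
    """Forward process: replace each token with MASK independently with probability t."""
    return [MASK if rng.random() < t else tok for tok in tokens]

def masked_diffusion_loss(predict: Predictor, tokens: List[int], t: float,
                          rng: random.Random) -> float:
    """One-sample estimate of the objective for a given t (0 < t <= 1):
    (1/t) times the negative log-likelihood of the original tokens,
    summed over the masked positions only."""
    noisy = forward_mask(tokens, t, rng)
    probs = predict(noisy)  # the predictor sees the whole noisy sequence
    nll = sum(-math.log(probs[i][tok])
              for i, tok in enumerate(tokens) if noisy[i] == MASK)
    return nll / t

def uniform_predictor(vocab_size: int) -> Predictor:
    """Placeholder model that assigns probability 1/vocab_size to every token."""
    def predict(seq: List[int]) -> List[List[float]]:
        return [[1.0 / vocab_size] * vocab_size for _ in seq]
    return predict

if __name__ == "__main__":
    rng = random.Random(0)
    seq = [3, 1, 4, 1, 5, 9, 2, 6]  # token ids from a 10-token vocabulary
    print(forward_mask(seq, 0.5, rng))  # roughly half the ids replaced by MASK
    print(masked_diffusion_loss(uniform_predictor(10), seq, 1.0, rng))
```

With t = 1.0 every token is masked, so the uniform predictor’s loss is 8 · ln 10 (about 18.42). In the method itself, t is sampled uniformly between 0 and 1 for each training example, and the exact normalization may differ from this sketch.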

Key facts about LLaDA:

- A 2.3-trillion-token pretraining phase

- 4.5 million instruction-tuning samples

- Bidirectional modeling: the model conditions on context on both sides of each masked token

- Competitive performance with LLaMA3 8B across benchmarks

Particularly noteworthy is LLaDA’s ability to break the “reversal curse” that typically plagues autoregressive models (for example, a model trained on “A is B” that fails to infer “B is A”), performing more consistently on both forward and backward reasoning tasks. The authors also report strong scaling trends, particularly in mathematical reasoning, suggesting a promising future for diffusion-based approaches to language modeling.
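
Because the mask predictor sees the whole sequence at once, generation does not have to run left to right. The sketch below is a simplified take on the “low-confidence remasking” sampler described in the LLaDA paper, as we understand it: start from a fully masked response, and at each step commit only the most confident predictions while leaving the rest masked. `sample`, `toy_predictor`, and the `trace` argument are hypothetical; the toy predictor ignores its input, so it only illustrates the unmasking order, not bidirectional reasoning itself.

```python
import math
from typing import Callable, List, Optional

MASK = -1  # hypothetical [MASK] token id for this toy example

# Hypothetical interface: maps a partially masked sequence to one probability
# distribution over the vocabulary for every position.
Predictor = Callable[[List[int]], List[List[float]]]

def sample(predict: Predictor, length: int, steps: int,
           trace: Optional[List[int]] = None) -> List[int]:
    """Iterative unmasking: start fully masked, then on each step keep only
    the most confident predictions and leave the others masked."""
    seq = [MASK] * length
    for step in range(steps):
        masked = [i for i, tok in enumerate(seq) if tok == MASK]
        if not masked:
            break
        # Spread the remaining masked positions evenly over the remaining steps.
        n_reveal = math.ceil(len(masked) / (steps - step))
        probs = predict(seq)  # the predictor sees left AND right context
        best = {i: max(range(len(probs[i])), key=probs[i].__getitem__)
                for i in masked}
        # Rank masked slots by the probability of their best token.
        ranked = sorted(masked, key=lambda i: probs[i][best[i]], reverse=True)
        for i in ranked[:n_reveal]:
            seq[i] = best[i]
            if trace is not None:
                trace.append(i)
        # Lower-confidence slots stay masked and are re-predicted next step.
    return seq

def toy_predictor(target: List[int], confidence: List[float],
                  vocab_size: int) -> Predictor:
    """Toy model: puts probability confidence[i] on target[i] at position i,
    spreading the rest evenly; it ignores the input entirely."""
    def predict(seq: List[int]) -> List[List[float]]:
        out = []
        for tok, c in zip(target, confidence):
            row = [(1.0 - c) / (vocab_size - 1)] * vocab_size
            row[tok] = c
            out.append(row)
        return out
    return predict

if __name__ == "__main__":
    model = toy_predictor(target=[2, 0, 1, 3], confidence=[0.4, 0.9, 0.6, 0.8],
                          vocab_size=4)
    order: List[int] = []
    print(sample(model, length=4, steps=4, trace=order))  # [2, 0, 1, 3]
    print(order)  # [1, 3, 2, 0]
```

With four steps, positions are filled in the order 1, 3, 2, 0, i.e. by confidence rather than strictly left to right.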

👤 Mira Murati: Shaping the Future of Human-AI Collaboration

The Verge reports that former OpenAI CTO Mira Murati has launched Thinking Machines Lab and assembled a team of prominent AI researchers. The company says it will emphasize human-AI collaboration over fully autonomous systems, a notable departure from much of the industry.

The company has attracted top talent, including:

- John Schulman (OpenAI co-founder) as Chief Scientist

- Barret Zoph as CTO

- Several key engineers and researchers from OpenAI’s special projects team

Murati’s vision focuses on making AI systems more understandable and customizable, with a commitment to transparency through published research and open-source code.

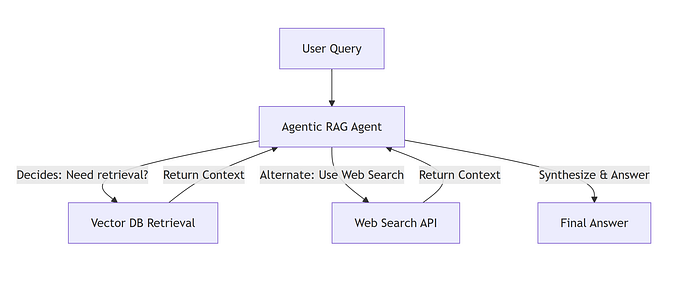

🎯 AI Engineer Summit 2025 Highlights

Talks at the AI Engineer Summit emphasized practical strategies for building and deploying AI systems. Anthropic’s Alexander Bricken stressed the importance of evaluation systems, stating, “Evals are your company’s intellectual property.”

Key takeaways include:

- Building robust evaluation systems before considering fine-tuning

- Developing representative test cases and comprehensive telemetry

- Including edge cases in testing protocols

- Leveraging existing tools (prompt engineering, context retrieval, caching)

- Treating fine-tuning as a last resort rather than a default solution
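
The first few takeaways can be sketched as a minimal eval harness: a set of test cases, each with a pass/fail check and optional tags such as "edge", run against a model while recording pass rates and latency as basic telemetry. This is an illustrative sketch, not anything presented at the summit. `EvalCase`, `run_evals`, `pass_rate_by_tag`, and the stand-in `toy_model` are hypothetical; in practice the model function would wrap a real LLM call.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class EvalCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the model output is acceptable
    tags: List[str] = field(default_factory=list)  # e.g. ["edge"]

@dataclass
class EvalResult:
    name: str
    passed: bool
    latency_s: float  # simple telemetry: wall-clock time per call
    tags: List[str]

def run_evals(model: Callable[[str], str], cases: List[EvalCase]) -> List[EvalResult]:
    """Run every case through the model and record pass/fail plus latency."""
    results = []
    for case in cases:
        start = time.perf_counter()
        output = model(case.prompt)
        latency = time.perf_counter() - start
        results.append(EvalResult(case.name, case.check(output), latency, case.tags))
    return results

def pass_rate_by_tag(results: List[EvalResult]) -> Dict[str, float]:
    """Pass rate overall ("all") and per tag, so edge cases stay visible."""
    buckets: Dict[str, List[bool]] = {"all": []}
    for r in results:
        for tag in ["all", *r.tags]:
            buckets.setdefault(tag, []).append(r.passed)
    return {tag: sum(v) / len(v) for tag, v in buckets.items() if v}

def toy_model(prompt: str) -> str:
    """Stand-in for an LLM call: upper-cases its input."""
    return prompt.upper()

CASES = [
    EvalCase("basic", "hello", lambda out: out == "HELLO"),
    EvalCase("empty input", "", lambda out: out == "", tags=["edge"]),
    EvalCase("padded input", "  spaced  ", lambda out: out == "SPACED", tags=["edge"]),
]

if __name__ == "__main__":
    results = run_evals(toy_model, CASES)
    print(pass_rate_by_tag(results))
```

Here the toy model fails the padded-input edge case, so the harness reports an overall pass rate of 2/3 but only 1/2 on edge cases; tracking tags separately is what surfaces that gap. Once a harness like this exists, prompt changes, retrieval, caching, or fine-tuning can all be compared against the same cases.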

🔮 Looking Ahead

The week’s developments suggest several key trends:

- Continued investment in massive compute resources drives AI capabilities

- Novel architectures challenge traditional approaches to language modeling

- Human-centric AI development gains momentum under veteran leadership

- Industry focus shifts toward systematic evaluation and practical deployment

Together, these advances point toward AI systems that are more capable, more understandable, and more practical across a range of domains.

Thank you for reading this week’s AI Dial-Up! I’d love to connect on LinkedIn or X and chat about what we’re building. Cheers!

References

1. xAI’s Grok 3 Launch Details

2. Large Language Diffusion Models